Configuration

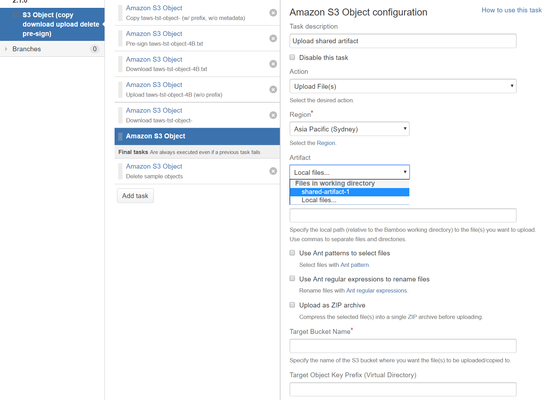

To configure an Amazon S3 Object task:

Navigate to the Tasks configuration tab for the job (this is the default job if you are creating a new plan).

Click the name of an existing Amazon S3 Object task, or click Add Task and then Amazon S3 Object Task to create a new task.

Complete the following settings:

Common to all tasks

Actions supported by this task:

S3 Features

Most S3 features are available by specifying additional Metadata fragments.

ZIP Archive Support

As of Tasks for AWS 2.9, you can compress files into a ZIP archive during upload, which makes it easier to use AWS services that support or require a ZIP file as the deployment artifact. To enable this, select the new Upload as ZIP archive option of the Amazon S3 Object task's Upload File(s) action.
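To illustrate what uploading as a ZIP archive amounts to, the following is a minimal sketch that bundles a set of files into a single archive using Python's standard library. It is not the task's implementation; the function name `zip_files` and its parameters are hypothetical, and it assumes that entries are stored under their path relative to a base directory.

```python
import zipfile
from pathlib import Path


def zip_files(paths, archive_path, base_dir="."):
    """Bundle the given files into one ZIP archive (hypothetical helper).

    Entry names are stored relative to base_dir; this layout is an
    assumption for illustration, not documented task behavior.
    """
    base = Path(base_dir).resolve()
    with zipfile.ZipFile(archive_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in paths:
            resolved = Path(path).resolve()
            # ZIP entry names always use forward slashes.
            zf.write(resolved, arcname=resolved.relative_to(base).as_posix())
    return archive_path
```

The resulting single archive can then be uploaded as one S3 object, which suits services that expect a ZIP deployment artifact.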

| Upload File(s) | |
|---|---|
| Don't fail if nothing to upload | Check to keep the build from failing when there is nothing to upload. Clear to fail the build in that case. |
| Artifact | Select the artifact you want to upload. |
| Source Local Path | Specify the local path (relative to the Bamboo working directory) to the files you want to upload. Use commas to separate files and directories. You can also use: |
| Use default excludes when selecting files | Check to apply Ant default excludes when selecting files. Uncheck to ignore default excludes. |
| Upload as ZIP archive | Check to compress the selected file(s) into a single ZIP archive before uploading. Uncheck to upload each file separately. |
| Target Bucket Name | Specify the name of the S3 bucket to upload the files to. |
| Target Object Key Prefix (Virtual Directory) | (Optional) Specify the S3 object key prefix to apply to the uploaded files in the target bucket. |
| Metadata Configuration | (Optional) Specify additional metadata in JSON format. Insert fragments from the inline Examples dialog to get started. |
| Tags | (Optional) Specify tags to apply to the resulting object(s) in JSON format. Insert fragments from the inline Examples dialog to get started. |
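S3 has a flat key space, so the Target Object Key Prefix only acts as a virtual directory by becoming the leading part of each object key. The sketch below shows one plausible way a prefix and a local relative path combine into a key; the helper `object_key` and its slash normalization are assumptions made for illustration, and the task itself may handle separators differently.

```python
def object_key(prefix: str, relative_path: str) -> str:
    """Combine an optional key prefix with a relative file path (hypothetical helper)."""
    # S3 keys use forward slashes regardless of the local operating system.
    name = relative_path.replace("\\", "/").lstrip("/")
    if not prefix:
        return name
    # Assumption: exactly one slash separates the prefix from the file name.
    return prefix.rstrip("/") + "/" + name


print(object_key("prefix", "taws-tst-object-4B.txt"))
```

With the prefix `prefix`, the file `taws-tst-object-4B.txt` maps to the key `prefix/taws-tst-object-4B.txt`, which matches the object key shown in the Variables section of this page.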

| Download Object(s) | |
|---|---|
| Don't fail if nothing to download | Check to keep the build from failing when there is nothing to download. Clear to fail the build in that case. |
| Source Bucket Name | Specify the name of the S3 bucket to download the objects from. |
| Source Object Key Prefix | Specify the key prefix of the S3 objects you want to download from the source bucket. |
| Target Local Path | (Optional) Specify the local path (relative to the working directory) to download the objects to. |

| Delete Object(s) | |
|---|---|
| Don't fail if nothing to delete | Check to keep the build from failing when there is nothing to delete. Clear to fail the build in that case. |
| Source Bucket Name | See Download Object(s) above. |
| Source Object Key Prefix | See Download Object(s) above. |

| Copy Object(s) | |
|---|---|
| Don't fail if nothing to copy | Check to keep the build from failing when there is nothing to copy. Clear to fail the build in that case. |
| Source Bucket Name | See Download Object(s) above. |
| Source Object Key Prefix | See Download Object(s) above. |
| Target Bucket Name | See Upload File(s) above. |
| Target Object Key Prefix (Virtual Directory) | See Upload File(s) above. |
| Metadata Configuration | See Upload File(s) above. |
| Tags | (Optional) Specify tags to apply to the resulting object(s) in JSON format. Insert fragments from the inline Examples dialog to get started. |

| Generate Pre-signed URL | Use S3 to provide configuration as code: the Generate Pre-signed URL action allows using S3 objects to provide configuration as code. Refer to Injecting task configuration via URLs for details. |
|---|---|
| Bucket Name | Specify the name of the S3 bucket the URL should target. |
| Object Key | Specify the key of the S3 object the URL should target. |
| Method | Select the HTTP method the URL will be used with. |
| Expiration | Specify how long the URL should remain valid, in seconds. |
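The signature in a pre-signed URL covers the HTTP method, so a consumer has to issue the request with the same method that was selected when the URL was generated. The following sketch shows the consuming side using only the Python standard library; the helper `presigned_request` and the placeholder URL are hypothetical, and the request is only built here, not sent.

```python
import urllib.request
from typing import Optional


def presigned_request(url: str, method: str = "GET",
                      body: Optional[bytes] = None) -> urllib.request.Request:
    """Build a request for a pre-signed URL (hypothetical helper).

    The method must match the one the URL was generated for; otherwise
    S3 rejects the request because the signature does not match.
    """
    return urllib.request.Request(url, data=body, method=method.upper())


# Placeholder URL for illustration only; a real pre-signed URL carries
# the signature and expiry as query parameters.
request = presigned_request("https://example.invalid/object-key?signature=placeholder", "put", b"payload")
print(request.get_method())
```

Passing the request to `urllib.request.urlopen` would perform the call; it fails once the configured Expiration has elapsed.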

Variables

All tasks support Bamboo Variable Substitution/Definition. This task's actions generate variables as follows:

Upload File(s)

Creating resource variables for uploaded object 'prefix/taws-tst-object-4B.txt':

... bamboo.custom.aws.s3.object.first.BucketName: taws-tst-target-us-east-1

... bamboo.custom.aws.s3.object.first.ETag: 1dafad37f6d9e169248bacb8485fd9cc

... bamboo.custom.aws.s3.object.first.ObjectKey: prefix/taws-tst-object-4B.txt

... bamboo.custom.aws.s3.object.first.VersionId: null
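A later script task can pick up these values. The sketch below assumes that Bamboo exposes variables to scripts as environment variables with the dots in the name replaced by underscores; verify this against your Bamboo version. The helper names `bamboo_env_name` and `read_bamboo_variable` are hypothetical.

```python
import os


def bamboo_env_name(variable: str) -> str:
    """Map a Bamboo variable name to its assumed environment variable name."""
    # Assumption: dots become underscores when variables are exported to scripts.
    return variable.replace(".", "_")


def read_bamboo_variable(variable: str, environ=os.environ):
    """Return the variable's value, or None if it is not set."""
    return environ.get(bamboo_env_name(variable))


# Yields None when run outside a Bamboo build where the variable is not set.
object_key = read_bamboo_variable("bamboo.custom.aws.s3.object.first.ObjectKey")
```

Inside other task configuration fields, the same value would typically be referenced through Bamboo's variable substitution syntax, for example `${bamboo.custom.aws.s3.object.first.ObjectKey}`.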

Download Object(s)

N/A

Delete Object(s)

N/A

Copy Object(s)

Generate Pre-signed URL

How-to Articles

Frequently Asked Questions (FAQ)
